Data analytics is the practice of examining raw data to extract meaningful insights that guide strategic decision-making. It helps answer questions such as when to run a marketing campaign, whether the current team structure is effective, and which customer segments are likely to adopt a new product. That makes it a cornerstone of successful business strategy. Converting raw data into actionable insights relies on a variety of methods and techniques, and the choice among them depends on the type of data under consideration and the specific insights sought.

What is Data Analysis, and Why is it Important?

Data analysis is the practice of uncovering valuable insights by examining data. This involves inspecting, cleaning, transforming, and modeling data using analytical and statistical tools, which are covered in more detail later in this article.

The significance of data analysis lies in its role in helping organizations make informed business decisions. In the modern business environment, companies consistently accumulate data through diverse methods, including surveys, online tracking, marketing analytics, subscription and registration data (such as newsletters), and social media monitoring.

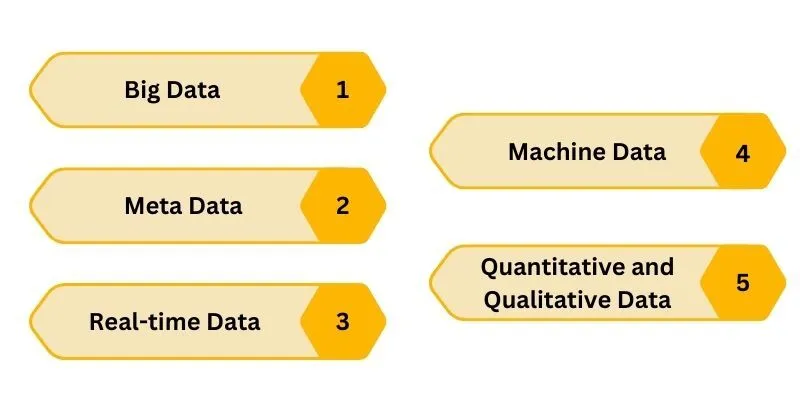

The collected data can take many forms, including the following:

Big Data

Big data refers to data that is so large, fast, or complex that traditional processing methods struggle or become impractical. The concept gained traction in the early 2000s, when industry analyst Doug Laney played a crucial role in establishing the mainstream definition of big data in terms of three characteristics: volume, velocity, and variety.

- Volume: Organizations continually amass vast amounts of data. In the recent past, storing such quantities of data would have posed significant challenges, but today, storage has become cost-effective and occupies minimal space.

- Velocity: The handling of received data is time-sensitive. With the proliferation of the Internet of Things (IoT), data may stream in incessantly and at an unprecedented speed, necessitating efficient processing and analysis.

- Variety: Data collected and stored by organizations manifests in diverse forms. This ranges from structured data—typical numerical information—to unstructured data, including emails, videos, audio, and more. The distinction between structured and unstructured data will be further explored in subsequent sections.

Metadata

Metadata is data that describes other data, such as an image file. In practical terms, you can see metadata by right-clicking a file in a folder and choosing "Get Info" on macOS (or "Properties" on Windows). This reveals details like file size, file type, creation date, and more.
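The same kind of file metadata can also be read programmatically. The following is a minimal sketch using Python's standard library; the function name `describe_file` and the fields it returns are illustrative choices, not part of any particular tool.

```python
import os
import tempfile
from datetime import datetime, timezone


def describe_file(path):
    """Return a few metadata fields for the file at `path` (hypothetical helper)."""
    info = os.stat(path)
    return {
        "name": os.path.basename(path),
        "extension": os.path.splitext(path)[1],
        "size_bytes": info.st_size,
        # Last-modified time is reported here because creation time
        # is not available consistently across platforms.
        "modified_utc": datetime.fromtimestamp(info.st_mtime, tz=timezone.utc),
    }


if __name__ == "__main__":
    # Create a small throwaway file so the example is self-contained.
    with tempfile.NamedTemporaryFile(suffix=".txt", delete=False) as handle:
        handle.write(b"hello")
    print(describe_file(handle.name))
    os.remove(handle.name)
```

Note that the dictionary describes the file without reading its contents, which is exactly what distinguishes metadata from the data itself.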

Real-time Data

Real-time data is information that is presented as soon as it is acquired. A notable example is a stock market ticker, which offers up-to-the-minute information on the most actively traded stocks.

Machine Data

Machine-generated data is produced entirely by machines without human instruction. An example is the call logs automatically generated by your smartphone.

Quantitative and Qualitative Data

Quantitative data is often structured: it typically takes the form of a "traditional" database, organized into rows and columns. Qualitative data, on the other hand, is usually unstructured and does not fit neatly into rows and columns.

What is the Difference Between Quantitative and Qualitative Data?

The approach to analyzing data is contingent on the type of data under consideration—quantitative or qualitative. Here's a breakdown of the differences:

- Quantitative Data:

Quantitative data encompasses measurable values, represented by specific quantities and numbers. Examples include sales figures, email click-through rates, website visitor counts, and percentage revenue increases. Techniques for analyzing quantitative data revolve around statistical, mathematical, or numerical analysis, often applied to large datasets. This involves manipulating statistical data through computational techniques and algorithms. Quantitative analysis techniques are frequently employed to elucidate specific phenomena or make predictions.

- Qualitative Data:

In contrast, qualitative data cannot be objectively measured and is more susceptible to subjective interpretation. Examples include comments provided in response to a survey question, statements from interviews, social media posts, and text found in product reviews. Qualitative data analysis is centered on making sense of unstructured data, such as transcripts of spoken conversations. Frequently, qualitative analysis involves organizing data into themes, a process that can be partly automated.
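As a rough illustration of how organizing qualitative data into themes might be automated, here is a minimal keyword-matching sketch. The theme names and keyword sets are hypothetical, and real projects often use more sophisticated text-analysis methods than simple keyword lookup.

```python
from collections import Counter

# Hypothetical themes and the keywords assumed to signal each one.
THEMES = {
    "price": {"expensive", "cheap", "price", "cost"},
    "delivery": {"shipping", "delivery", "late", "arrived"},
    "quality": {"broke", "sturdy", "quality", "durable"},
}


def tag_themes(comment):
    """Return the sorted list of themes whose keywords appear in the comment."""
    words = {word.strip(".,!?").lower() for word in comment.split()}
    return sorted(theme for theme, keywords in THEMES.items() if words & keywords)


def count_themes(comments):
    """Count how many comments mention each theme."""
    counts = Counter()
    for comment in comments:
        counts.update(tag_themes(comment))
    return counts


if __name__ == "__main__":
    reviews = ["Great quality, but delivery was late!", "Too expensive."]
    print(count_themes(reviews))
```

The result is a quantitative summary (counts per theme) derived from qualitative input, which is often the bridge between the two kinds of analysis.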

Data analysts engage with both quantitative and qualitative data, necessitating familiarity with a diverse array of analysis methods. The following section outlines some of the most valuable techniques in this regard.

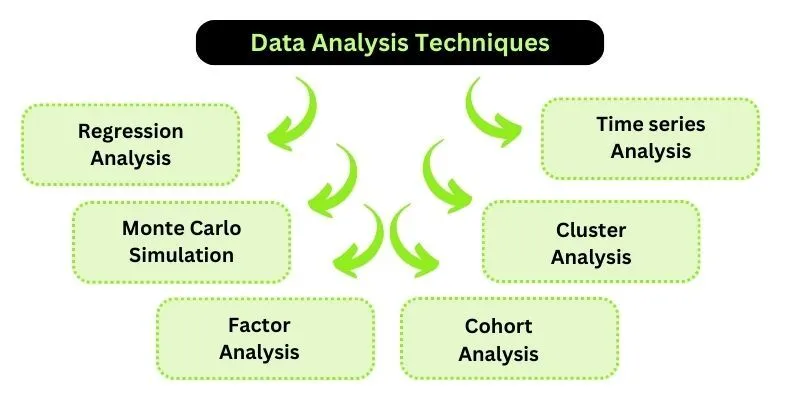

Data Analysis Techniques

Now that we are aware of a few different kinds of data, let's concentrate on the subject at hand: various approaches to data analysis.

- Regression Analysis: Unveiling Relationships and Predictions

Regression analysis, a potent statistical technique, is utilized to gauge the relationship between a set of variables. In essence, it seeks to explore whether there is a correlation between a dependent variable (the outcome or measure of interest) and one or more independent variables (factors that may influence the dependent variable). Its primary aim is to measure how specific variables might impact the dependent variable, enabling the identification of trends and patterns. This analytical tool proves particularly valuable for making predictions and forecasting future trends.

For example, consider an e-commerce company aiming to assess the correlation between (a) the expenditure on social media marketing and (b) sales revenue. Here, sales revenue serves as the dependent variable, representing the focal point for prediction and enhancement. Social media spend becomes the independent variable, and the objective is to ascertain its impact on sales—whether increasing, decreasing, or maintaining the current budget. Through regression analysis, the company can discern the relationship between these variables. A positive correlation would suggest that higher spending on social media marketing corresponds to increased sales revenue. Conversely, no correlation might imply that social media marketing has minimal impact on sales. Understanding this relationship aids informed decision-making regarding the social media budget.
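The e-commerce example above can be sketched as a simple linear regression fitted by ordinary least squares. This is a minimal illustration, assuming a single independent variable; the spend and revenue figures are invented for the example, and the function names are hypothetical.

```python
from math import sqrt


def fit_line(x, y):
    """Ordinary least squares for y = intercept + slope * x."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def pearson_r(x, y):
    """Pearson correlation coefficient between x and y."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    syy = sum((yi - mean_y) ** 2 for yi in y)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    return sxy / sqrt(sxx * syy)


# Invented monthly figures, both in thousands of USD.
social_spend = [1, 2, 3, 4, 5]
sales_revenue = [11, 15, 15, 19, 20]

if __name__ == "__main__":
    slope, intercept = fit_line(social_spend, sales_revenue)
    print(f"revenue ~ {intercept:.2f} + {slope:.2f} * spend")
    print(f"correlation: {pearson_r(social_spend, sales_revenue):.3f}")
```

The slope estimates how much revenue changes per unit of additional spend in this sample; it describes an association in the data and says nothing by itself about cause.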

However, it's crucial to note that regression analysis, on its own, cannot establish causation. A positive correlation between social media expenditure and sales revenue might suggest an influence, but definitive conclusions about cause and effect require additional considerations. The method indicates relationships but does not reveal the underlying causal mechanisms.

There are various types of regression analysis, and the choice of model hinges on the nature of the dependent variable. If the dependent variable is continuous (measurable on a continuous scale, like sales revenue in USD), one type of regression, such as linear regression, is used. If the dependent variable is categorical (its values fall into distinct groups, like customer location by continent), a different model, such as logistic regression, is used instead.

In short, regression analysis helps businesses uncover connections between variables, offering insights that guide predictions and strategic decisions. Its versatility in handling diverse types of data makes it a fundamental tool for understanding and leveraging relationships within datasets. For a more in-depth exploration of different regression analysis types and selecting the appropriate model, refer to our comprehensive guide.

- Monte Carlo Simulation

Every decision has several possible outcomes, each with its own consequences. Whether you take the bus or walk, factors like traffic delays or unexpected encounters can shape the journey. In everyday scenarios we weigh pros and cons instinctively, but high-stakes decisions demand a careful assessment of potential risks and rewards.

Monte Carlo simulation, alternatively referred to as the Monte Carlo method, is a computerized technique designed to model possible outcomes and their associated probability distributions. It systematically considers a spectrum of potential results and calculates the likelihood of each outcome. This method proves invaluable for data analysts engaged in advanced risk analysis, providing a robust framework to forecast future scenarios and inform decision-making.

So, how does Monte Carlo simulation operate, and what insights can it yield? The process begins with a mathematical model of the data, often implemented in a spreadsheet. Within this model, you identify one or more outputs of interest, such as profit or the number of sales, and the inputs, which are variables that may affect the chosen output. For instance, if profit is the output, inputs might include sales volume, total marketing expenditure, and employee salaries. When the exact values of these inputs are uncertain, Monte Carlo simulation estimates the range of possible outcomes and their probabilities by sampling the inputs at random many times.

Consider the question: how much profit can you expect if you achieve 100,000 sales and recruit five new employees earning $50,000 each? Monte Carlo simulation tackles this by drawing random samples from distributions that the user specifies for the uncertain variables. By recalculating the model many times, it approximates the range of possible outcomes and their probability distribution. In effect, fixed input values are replaced with functions that generate random samples, which gives a fuller view of potential scenarios.
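The profit question above can be sketched as a small Monte Carlo simulation. This is a minimal illustration only: the profit formula, the choice of distributions, and every parameter other than the 100,000 sales and the five $50,000 salaries are assumptions made up for the example.

```python
import random
import statistics


def simulate_profit(rng):
    """One random scenario of a hypothetical profit model (USD)."""
    sales = rng.gauss(100_000, 10_000)                   # units sold (assumed spread)
    margin = rng.uniform(4.0, 6.0)                       # assumed profit per sale
    marketing = rng.triangular(50_000, 150_000, 80_000)  # low, high, most likely
    salaries = 5 * 50_000                                # five new employees
    return sales * margin - marketing - salaries


def run_simulation(trials=10_000, seed=42):
    """Repeat the scenario many times with a seeded generator for reproducibility."""
    rng = random.Random(seed)
    return [simulate_profit(rng) for _ in range(trials)]


def summarize(profits):
    """Summarize the simulated distribution of profit."""
    ordered = sorted(profits)
    return {
        "mean": statistics.fmean(profits),
        "p5": ordered[int(0.05 * len(ordered))],
        "p95": ordered[int(0.95 * len(ordered))],
        "prob_loss": sum(p < 0 for p in profits) / len(profits),
    }


if __name__ == "__main__":
    for name, value in summarize(run_simulation()).items():
        print(f"{name}: {value:,.2f}")
```

Rather than a single profit figure, the output is a distribution: an average, a plausible range (5th to 95th percentile), and an estimated probability of making a loss. Changing the assumed input distributions changes these results, which is why choosing them carefully matters.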

The versatility of the Monte Carlo method makes it one of the most favored techniques for assessing the impact of unpredictable variables on a specific output. This characteristic renders it particularly apt for risk analysis, where understanding the potential outcomes and their associated probabilities is paramount. By providing a nuanced perspective on the interplay between variables, Monte Carlo simulation equips decision-makers with the insights needed to navigate complex scenarios and make informed choices in the face of uncertainty.

- Factor Analysis

Factor analysis serves as a crucial technique in data analysis, specializing in the reduction of a large number of variables to a more manageable set of factors. The fundamental principle behind factor analysis lies in the belief that numerous observable variables are interrelated due to their association with an underlying construct. This method not only streamlines extensive datasets but also unveils concealed patterns, making it instrumental for exploring abstract concepts that aren't easily measured or observed, such as customer loyalty, satisfaction, wealth, happiness, or fitness.

Consider a scenario where a company aims to gain a deeper understanding of its customers. A comprehensive survey is distributed, featuring an array of questions covering sentiments towards the company and product, alongside inquiries about household income and spending preferences. The resulting dataset comprises a multitude of responses for each customer, offering insights into a hundred different aspects.

Rather than scrutinizing each response individually, factor analysis comes into play to organize them into factors that share commonality—an association with a single underlying construct. In this process, factor analysis identifies strong correlations, or covariance, among survey items. For instance, if a robust positive correlation exists between household income and the willingness to spend on skincare each month, these variables may be grouped together. Alongside other relevant variables, they may be condensed into a single factor, such as "consumer purchasing power." Similarly, if a perfect customer experience rating correlates with a positive response regarding the likelihood of recommending the product to a friend, these items may be amalgamated into a factor termed "customer satisfaction."

Ultimately, the outcome is a reduced number of factors rather than an overwhelming array of individual variables. These factors become the focal points for subsequent analysis, providing a consolidated perspective and facilitating a deeper understanding of the subject under investigation, whether it be customer behavior or any other area of interest. Factor analysis proves invaluable in distilling complexity, revealing meaningful patterns, and enabling more nuanced exploration of multifaceted datasets.
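The correlation step that factor analysis builds on can be sketched as follows. Note that this is only a correlation screen, not a full factor analysis (it does not extract factors or loadings); the survey items, scores, and the 0.8 threshold are all hypothetical.

```python
from itertools import combinations
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length lists."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    syy = sum((yi - mean_y) ** 2 for yi in y)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    return sxy / sqrt(sxx * syy)


def correlated_pairs(responses, threshold=0.8):
    """List pairs of survey items whose absolute correlation meets the threshold."""
    pairs = []
    for a, b in combinations(responses, 2):
        r = pearson_r(responses[a], responses[b])
        if abs(r) >= threshold:
            pairs.append((a, b, round(r, 2)))
    return pairs


# Invented answers from five respondents (one list entry per respondent).
survey = {
    "household_income": [30, 45, 60, 80, 100],
    "skincare_spend": [10, 20, 25, 40, 50],
    "experience_rating": [5, 2, 4, 1, 3],
    "would_recommend": [5, 1, 4, 2, 3],
}

if __name__ == "__main__":
    for a, b, r in correlated_pairs(survey):
        print(f"{a} <-> {b}: r = {r}")
```

Items that end up paired here are candidates for grouping under a shared factor, such as "consumer purchasing power" or "customer satisfaction" in the example above; a dedicated statistical package would be needed to estimate the factors themselves.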

- Cohort Analysis

Cohort analysis, a vital data analytics technique, involves categorizing users based on a shared characteristic. Once users are grouped into cohorts, analysts can meticulously track their behavior over time, unveiling trends and patterns that enhance comprehension of customer dynamics.

A cohort is a group of individuals who share a common characteristic or take a specific action during a defined time period. Examples include students who enrolled in university in 2020, who form the 2020 cohort, or customers who made purchases from an online store app in December, who form another cohort.

With cohort analysis, the approach shifts from examining all customers in a singular moment to scrutinizing how specific groups evolve over time. Instead of isolated snapshots, the focus extends to understanding customer behavior within the broader context of the customer lifecycle. This dynamic perspective enables the identification of behavior patterns at different stages, from the initial website visit to email newsletter sign-up, and through to the first purchase.

The utility of cohort analysis lies in its capacity to empower companies to tailor their services to distinct customer segments. Consider initiating a 50% discount campaign to attract new customers. Through cohort analysis, you can monitor the purchasing behavior of this specific group over time, gauging whether they make subsequent purchases and how frequently. This nuanced understanding enables companies to strategize the timing of follow-up discount offers or retargeting ads on social media, enhancing the overall customer experience.
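Tracking a cohort's purchasing behavior over time can be sketched as a simple retention table. This is a minimal illustration, assuming cohorts are defined by the month of a customer's first purchase; the purchase log and function name are hypothetical.

```python
from collections import defaultdict


def retention_table(purchases):
    """Map each cohort (first-purchase month) to the share of it active per month.

    `purchases` is a list of (customer_id, "YYYY-MM") tuples.
    """
    # A customer's cohort is the earliest month in which they purchased.
    first_month = {}
    for customer, month in sorted(purchases, key=lambda p: p[1]):
        first_month.setdefault(customer, month)

    cohort_sizes = defaultdict(int)
    for cohort in first_month.values():
        cohort_sizes[cohort] += 1

    # Collect the distinct customers from each cohort who bought in each month.
    active = defaultdict(lambda: defaultdict(set))
    for customer, month in purchases:
        active[first_month[customer]][month].add(customer)

    return {
        cohort: {
            month: len(customers) / cohort_sizes[cohort]
            for month, customers in sorted(months.items())
        }
        for cohort, months in active.items()
    }


# Invented purchase log.
PURCHASES = [
    ("a", "2023-12"), ("b", "2023-12"), ("c", "2023-12"), ("d", "2023-12"),
    ("a", "2024-01"), ("b", "2024-01"), ("e", "2024-01"),
    ("a", "2024-02"), ("e", "2024-02"),
]

if __name__ == "__main__":
    for cohort, months in sorted(retention_table(PURCHASES).items()):
        print(cohort, months)
```

Reading across a row shows how a single cohort's activity decays or holds over time, which is the information needed to time follow-up offers for that group.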

- Cluster Analysis

Cluster analysis, an exploratory technique, serves the purpose of identifying inherent structures within a dataset. The primary objective is to categorize diverse data points into groups, commonly known as clusters, which exhibit internal homogeneity and external heterogeneity. Put simply, data points within a cluster share similarities with each other but vary from those in other clusters. The utility of clustering lies in gaining insights into the distribution of data within a dataset, often serving as a preprocessing step for subsequent algorithms.
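One common way to form such clusters is the k-means algorithm, sketched below in plain Python. The article does not prescribe a specific algorithm, so k-means is used here as an assumed example; the data points are invented, and the number of clusters `k` must be chosen by the analyst.

```python
import random
from math import dist


def k_means(points, k, iterations=20, seed=0):
    """Basic k-means: returns (labels, centroids) for a list of coordinate tuples."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # start from k randomly chosen points
    labels = []
    for _ in range(iterations):
        # Assignment step: each point joins its nearest centroid.
        labels = [
            min(range(k), key=lambda c: dist(p, centroids[c])) for p in points
        ]
        # Update step: each centroid moves to the mean of its members.
        for c in range(k):
            members = [p for p, label in zip(points, labels) if label == c]
            if members:
                centroids[c] = tuple(sum(axis) / len(members) for axis in zip(*members))
    return labels, centroids


# Invented customers described by two numeric features.
CUSTOMERS = [(1.0, 1.0), (1.5, 2.0), (1.0, 0.5), (8.0, 8.0), (9.0, 9.0), (8.5, 9.5)]

if __name__ == "__main__":
    labels, centroids = k_means(CUSTOMERS, k=2)
    print(labels)
    print(centroids)
```

The cluster labels themselves are arbitrary numbers; what matters is which points share a label.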

The applications of cluster analysis span various domains. In marketing, it is frequently used to segment a large customer base into distinct groups, enabling a more focused and tailored approach to advertising and communication. Insurance companies use cluster analysis to investigate the factors contributing to a high number of claims in specific locations. Geologists use cluster analysis to assess earthquake risks in different cities, which helps in planning protective measures.

It's crucial to acknowledge that while cluster analysis can reveal structures within the data, it does not provide explanations for the existence of these structures. Nevertheless, it serves as a valuable initial step in comprehending the inherent patterns in the data, paving the way for more in-depth and focused analysis.

- Time Series Analysis

Time series analysis stands as a statistical technique employed to unveil trends and cycles over time. Time series data, a sequential collection of data points measuring the same variable at distinct time intervals (e.g., weekly sales figures or monthly email sign-ups), serves as the foundation for this analysis. By scrutinizing time-related trends, analysts gain the ability to forecast potential fluctuations in the variable of interest.

In the realm of time series analysis, key patterns under consideration include:

- Trends: Sustained increases or decreases that persist over an extended period.

- Seasonality: Predictable fluctuations within the data attributed to seasonal factors over shorter time spans. An illustration could be the recurring peak in swimwear sales during the summer months every year.

- Cyclic Patterns: Fluctuations that recur without a fixed, predictable period. Unlike seasonality, cyclic patterns are not tied to specific seasons but may stem from economic or industry-related conditions.
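Trend and seasonality can be separated in a rough way with simple arithmetic. The sketch below uses moving-average smoothing to expose the trend and per-position averages to estimate the seasonal pattern; this is a basic smoothing technique, not a moving average (MA) forecasting model, and the quarterly sales figures are invented.

```python
def moving_average(series, window):
    """Average of each run of `window` consecutive values.

    With `window` equal to the season length, seasonal swings cancel out
    and the remaining values follow the trend.
    """
    return [
        sum(series[i:i + window]) / window
        for i in range(len(series) - window + 1)
    ]


def seasonal_profile(series, period):
    """Average deviation from the overall mean at each position in the season."""
    overall = sum(series) / len(series)
    profile = []
    for position in range(period):
        values = series[position::period]
        profile.append(sum(values) / len(values) - overall)
    return profile


# Invented quarterly sales for three years: rising trend plus a Q3 peak.
QUARTERLY_SALES = [10, 20, 30, 20, 14, 24, 34, 24, 18, 28, 38, 28]

if __name__ == "__main__":
    print("trend:", moving_average(QUARTERLY_SALES, window=4))
    print("seasonal:", seasonal_profile(QUARTERLY_SALES, period=4))
```

On this data the smoothed series rises steadily while the seasonal profile shows the third quarter sitting above the average and the first quarter below it.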

The ability to make informed predictions about the future is highly valuable for businesses. Time series analysis and forecasting are used in many industries, prominently in stock market analysis, economic forecasting, and sales projection. Various time series models are available; common ones are built from autoregressive (AR), integrated (I), and moving average (MA) components, which are combined in ARIMA models. The choice of a particular model depends on the characteristics of the data and the intended predictive results.

For a comprehensive understanding of time series analysis, please refer to our detailed guide.

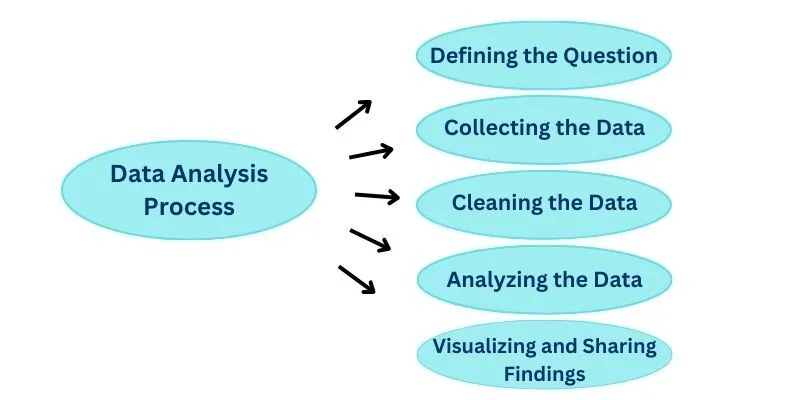

The Data Analysis Process

To derive meaningful insights from data, data analysts follow a step-by-step process. Here is a concise summary of the key phases in the data analysis process:

- Defining the Question

Initiating the process involves crafting a problem statement or objective, framing a question pertinent to the business problem at hand.

Determining the relevant data sources essential for addressing the defined question is a crucial component of this phase.

- Collecting the Data

Once the objective is clear, strategizing the collection and aggregation of pertinent data comes into play.

Decisions about using quantitative (numeric) or qualitative (descriptive) data and identifying their classification as first-party, second-party, or third-party data are critical considerations.

- Cleaning the Data

Regrettably, collected data isn't immediately ready for analysis; a meticulous data-cleaning process is imperative.

This phase, often time-intensive, involves tasks such as removing errors, duplicates, and outliers, restructuring data, and filling in significant data gaps.
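The cleaning tasks listed above can be sketched in a few small functions. This is a minimal illustration under stated assumptions: records are plain dictionaries, missing values are stored as `None` and filled with the median, and outliers are defined by the common 1.5 x IQR rule. The field names and order values are invented, and the right choices depend on the dataset.

```python
import statistics


def drop_duplicates(rows):
    """Keep the first occurrence of each identical record."""
    seen, unique = set(), []
    for row in rows:
        key = tuple(sorted(row.items()))
        if key not in seen:
            seen.add(key)
            unique.append(row)
    return unique


def fill_missing(rows, field):
    """Replace None in `field` with the median of the known values."""
    known = [row[field] for row in rows if row[field] is not None]
    fallback = statistics.median(known)
    return [
        {**row, field: fallback if row[field] is None else row[field]}
        for row in rows
    ]


def drop_outliers(rows, field, k=1.5):
    """Remove rows whose value lies more than k * IQR outside the quartiles."""
    q1, _, q3 = statistics.quantiles([row[field] for row in rows], n=4)
    low, high = q1 - k * (q3 - q1), q3 + k * (q3 - q1)
    return [row for row in rows if low <= row[field] <= high]


# Invented order records with a duplicate, a gap, and an extreme value.
ORDERS = [
    {"customer_id": 1, "order_value": 20},
    {"customer_id": 1, "order_value": 20},
    {"customer_id": 2, "order_value": 22},
    {"customer_id": 3, "order_value": 25},
    {"customer_id": 4, "order_value": None},
    {"customer_id": 5, "order_value": 24},
    {"customer_id": 6, "order_value": 21},
    {"customer_id": 7, "order_value": 500},
    {"customer_id": 8, "order_value": 23},
]

if __name__ == "__main__":
    cleaned = drop_outliers(
        fill_missing(drop_duplicates(ORDERS), "order_value"), "order_value"
    )
    for row in cleaned:
        print(row)
```

The order of the steps matters: duplicates are removed before computing the median, and gaps are filled before the outlier check so that every row has a comparable value. Whether an extreme value is an error or a genuine observation is a judgment call that code alone cannot make.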

- Analyzing the Data

With clean data in hand, the focus shifts to analysis. Various methods, as outlined earlier, can be applied based on the specific objective.

Analysis may fall into descriptive (identifying past occurrences), diagnostic (understanding the reasons behind events), predictive (forecasting future trends), or prescriptive (offering recommendations) categories.

- Visualizing and Sharing Findings

The culmination of the process involves translating insights into visualizations for effective communication.

Data visualization tools like Google Charts or Tableau are often employed to present findings, facilitating comprehension and decision-making.

As the data analysis journey unfolds, each phase is crucial in extracting valuable insights and informing strategic decisions.

The Best Tools for Data Analysis

Navigating through each phase of the data analysis process demands a versatile toolkit for data analysts. Here's a concise list of top-notch tools that enable analysts to derive valuable insights from data.

- Microsoft Excel

A fundamental spreadsheet tool widely used for data organization, manipulation, and analysis.

- Python

Python stands out as a flexible programming language celebrated for its array of data analysis libraries, including Pandas and NumPy.

- R

A statistical programming language with comprehensive packages for data analysis and visualization.

- Jupyter Notebook

An open-source tool facilitating interactive computing, ideal for creating and sharing documents containing live code, equations, visualizations, and narrative text.

- Apache Spark

A distributed data processing framework capable of handling large-scale data processing tasks efficiently.

- SAS

A software suite offering advanced analytics, business intelligence, and data management solutions.

- Microsoft Power BI

A business analytics tool that converts data into interactive visualizations and insights.

- Tableau

A leading data visualization tool enabling users to create interactive and shareable dashboards.

- KNIME

An open-source platform utilizing visual programming for data analytics, reporting, and integration.

Each of these tools has a crucial role in improving the capabilities of data analysts, providing them with the means to analyze, visualize, and communicate insights from diverse datasets effectively.